DeBERTa is the new King

Explore how DeBERTa revolutionizes NLP with its innovative Disentangled Attention Mechanism and Enhanced Mask Decoder, surpassing previous models like BERT and RoBERTa in performance and setting a new benchmark in the field.

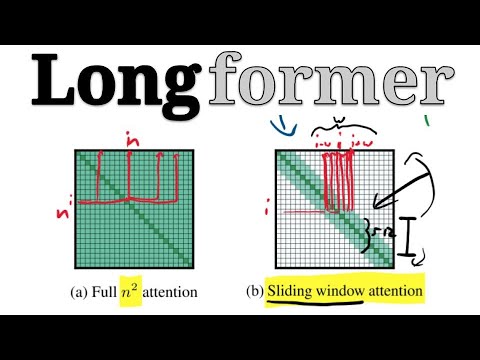

Discover how Longformer overcomes the limitations of traditional Transformer models by introducing an attention mechanism that scales linearly with sequence length, enabling efficient processing of long documents.